Paul and I have just presented our ‘lightning talk’ on the use of WordPress MU and Scriblio to create a platform for publishing multiple OPAC catalogues and then exposing the aggregate data as RDF using Triplify. I blogged about this idea a while back and this is the first presentation we’ve given. Not sure what people made of it. Too ambitious? Threatening? Confusing? All I know is that from where I’m standing, it would require a relatively small amount of funding to show it working in principle with a handful of library catalogues. The difficult part would be scaling it to work for 100+ catalogues (though bear in mind, wordpress.com hosts 6 million sites) and satisfying the politics of each institution. Still, that shouldn’t stop us from trying.

Category: Mashups

The Wire. Linking aggregated posts and comments

Philip Schmidt has developed a way to aggregate both posts and comments inline. Read more about it on Philip’s blog. Jim Groom’s posted about it, too. I have to run to a meeting soon, but I just wanted to show how you can take the Yahoo Pipes output and run it through feed2js to embed The Wire in just about any web page. Nice.

Using Google Reader as an OPML editor and feed blender

Last week, Google announced a new feature for Google Reader that is worth noting here, if only because it will make my work a little easier. They’ve introduced the idea of ‘bundles’ of feeds that anyone can create and share via Google Reader, email, OPML or as an Atom feed. There was a bit of confusion at first about what happened after you created a bundle and shared it. Dave Winer, based on an exchange with Kevin Marks, thought that the bundles were dynamic ‘reading lists’ based on a proprietary format. This isn’t the case but it’s worth reading Dave Winer’s original post with comments, and his follow up post which clarifies what reading lists are (technically, they’re ‘subscription lists’ – part of the OPML 2.0 specification).

Anyway, what Google has introduced in this update to their feed reader is still very useful and functionally quite similar to the reading list concept. It allows me to group multiple feeds into a set/reading list/bundle and then share that set of feeds with my Google Reader ‘friends’, email a link to a web page of that bundle or download an OPML file of the bundle. This last feature is particularly cool because it means your bundle is portable via the OPML open standard and can be shared beyond Google Reader.

Effectively, Google Reader has become a simple OPML editor, allowing anyone to gather feeds together and export them as an OPML file. Even better, your bundle is also available as a ‘blended’ Atom feed, achieving something similar to Dave Winer’s notion of a dynamic ‘reading list’ where the creator of the bundle can add or remove feeds and the Atom feed is dynamically updated to reflect those changes. Until now it was a bit of a hassle to create a blended feed from multiple sources. Yahoo! Pipes is a powerful way of doing it but Pipes isn’t for everyone and I’ve found the feeds it produces are not always available and compatible with other feed reading applications. Recently, I’ve been creating ‘digests’ in feed.informer, but I’m more inclined to use Google Reader now as it’s where I do all my feed reading and I know the application well. Note that you don’t have to remain subscribed to the feed in Google Reader in order for a bundle to remain persistent either. You can create a bundle from feeds you later unsubscribe from in your reader and the feeds are not deleted from the bundle.
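For anyone who wants to see what a bundle amounts to once it has been exported, here is a rough sketch in Python of writing a small OPML file and reading the feed URLs back out of it. The feed titles and URLs are placeholders I made up, and the function names are mine; it only assumes the usual OPML convention of `outline` elements carrying an `xmlUrl` attribute, not anything specific to what Google Reader emits.

```python
import xml.etree.ElementTree as ET

# Hypothetical bundle: these titles and URLs are placeholders, not real feeds.
FEEDS = [
    ("Example blog", "http://example.com/feed/"),
    ("Example comments", "http://example.com/comments/feed/"),
]


def build_opml(title, feeds):
    """Return an OPML 2.0 document (as a string) listing the given feeds."""
    opml = ET.Element("opml", version="2.0")
    head = ET.SubElement(opml, "head")
    ET.SubElement(head, "title").text = title
    body = ET.SubElement(opml, "body")
    for text, url in feeds:
        # One outline element per feed; xmlUrl holds the feed address.
        ET.SubElement(body, "outline", type="rss", text=text, xmlUrl=url)
    return ET.tostring(opml, encoding="unicode")


def feed_urls(opml_text):
    """Extract every xmlUrl from an OPML document, in document order."""
    root = ET.fromstring(opml_text)
    return [o.get("xmlUrl") for o in root.iter("outline") if o.get("xmlUrl")]


if __name__ == "__main__":
    document = build_opml("My bundle", FEEDS)
    print(feed_urls(document))
```

Because the result is plain XML, the same file can be imported into any reader that understands OPML, which is the portability point made above.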

There are two obvious uses for all of this. First, a teacher could bundle a reading list of feeds and share them with students via Google Reader, as a simple web page, an OPML file or dynamic Atom feed. Second, using the Atom output, it’s now easy for anyone to create a lifestream feed of all their activity on the web and embed it on their web page or just archive it in Google Reader or elsewhere.

Scriblio, Triplify and XMPP PubSub

It occurred to me this morning, as I woke from my slumber, that the work I’ve been doing recently with WordPress could also be applied to a library catalogue using Scriblio.

Scriblio (formerly WPopac) is an award winning, free, open source CMS and OPAC with faceted searching and browsing features based on WordPress. Scriblio is a project of Plymouth State University, supported in part by the Andrew W. Mellon Foundation.

Which means that you can import your library catalogue into WordPress and the user can search for and retrieve a record for The Films of Jean-Luc Godard. Have a look around Plymouth State’s Scriblio and you’ll get a good feel for what’s possible.

Anyway, taking Scriblio’s functionality for granted, you could easily add Triplify to the mix as I have discussed before. So with very little effort, you can convert your library catalogue to RDF N-Triples (and/or JSON). My question to you librarians is: knowing this is possible and fairly trivial to do, is there any value to you in exposing your OPACs in this way?

Next, as I lay listening to my daughter chat to her squeaky duck, I thought about the other stuff I’ve been looking at recently with WordPress. Once you think of your library catalogue as a WordPress site, there’s quite a lot of fun to be had. You could ramp up the feeds that you offer from your OPAC, use the OpenCalais API to add semantic tags, plug in some more semantic add-ons if you wish (autodiscovery of SIOC, FOAF, OAI-ORE data??), and, perhaps most fun of all, publish OPAC records in realtime over XMPP PubSub.

Which brings me to JISCPress, our recent #jiscri project proposal, which we may or may not get funded (what are we, a week or two away from finding out??). In that project, we’re proposing a WordPress MU platform for publishing and discussing JISC funding calls and project reports (among other things). There’s a lot of cross-over between the above Scriblio ideas and JISCPress. So much so, that it’s probably no more than a day’s work to transform the JISCPress platform, hosted as an Amazon Machine Image, to a multi-user OPAC platform where, potentially, all UK university libraries publish their OPACs via separate Scriblio sites.

You could then, like wordpress.com has done, publish an XMPP firehose from every catalogue over PubSub for search engines or whoever is interested in realtime data from UK university library catalogues. Alternatively, instead of the WPMU set up, each university library could maintain their own Scriblio install and publish an XMPP feed to an agreed server (though that approach seems like more hassle than is necessary if you ask me. You’re bound to have some libraries falling behind and not upgrading their sites as things develop. For less than a collective £4K/year, we could all buy into commercial support for a WPMU site from Automattic to help maintain server-side stuff).

I dunno. Maybe this is all off the wall, but the building blocks are all there. Is anyone experimenting with Scriblio in this way? Don’t tell me, a bunch of you have been doing it for years…

Getting your Triples into Talis Connected Commons

A few days ago, I wrote about adding Triplify to your web application. Specifically, I wrote about adding it to WordPress, but the same information can be applied to most web publishing platforms. Earlier this month, TALIS announced their Connected Commons platform and yesterday they announced a commercial version of their platform for the structured storage of Linked Data. Storage is all very well, but more importantly they have an API for developers, so that the data can be queried and creatively re-used or mashed up.

So this got me thinking about JISCPress, our recent JISC Rapid Innovation Programme bid, which proposes a WordPress Multi-User based platform for publishing JISC funding calls and the reports of funded projects. This is based on my experience of running WriteToReply with Tony Hirst.

Although a service for comment and discussion around documents, one of the things that interests me most about WriteToReply and, consequently, the JISCPress proposal, is the cumulative storage of data on the platform and how that data might be used. No surprise really as my background is in archiving and collections management. As with the University of Lincoln blogs, WriteToReply and the proposed JISCPress platform aggregate published content into a site-wide ‘tags’ site that allows anyone to search and browse through all content that has been published to the public. In the case of the university blogs, that’s a large percentage of blogs, but for WriteToReply and JISCPress, it would be pretty much every document hosted on the platform.

You can see from the WriteToReply tags site that over time, a rich store of public documents could be created for querying and re-use. The site design is a bit clunky right now but under the hood you’ll notice that you can search across the text of every document, browse by document type and by tag. The tags are created by publishing the content to OpenCalais, which returns a whole bunch of semantic keywords for each document section. You’ll also notice that an RSS feed is available for any search query, any category and any tag or combination of tags.

Last night, I was thinking about the WriteToReply site architecture (note that when I mention WriteToReply, it almost certainly applies to JISCPress, too – same technology, similar principles, different content). Currently, we categorise each document by document type so you’ll see ‘Consultations’, ‘Action Plans’, ‘Discussion Papers’, etc. We author all documents under the WriteToReply username, too, and tag each document section both manually and via OpenCalais. However, there’s more that we could do, with little effort, to mark up the documents and I’ve started sketching it out.

You’ll see from the diagram that I’m thinking we should introduce location and subject categories. There will be formal classification schemes we could use. For example, I found a Local Government Classification Scheme, which provides some high level subjects that are the type of thing I’m thinking about. I’m not suggesting we start ‘cataloguing’ the documents, but simply borrow, at the top level, from recognised classification schemes that are used elsewhere. I’m also thinking that we should start creating a new author for each document and in the case of WriteToReply, the author would be the agency who issued the consultation, report, or whatever.

So following these changes, we would capture the following data (in bold), for example:

The Home Office created Protecting the public in a changing communications environment on April 27th which is a consultation document for England, Wales and Scotland, categorised under Information and communication technology with 18 sections.

Section one is tagged Governor, Home Department, Office of Public Sector Information, Secretary of State, Surrey.

Section two is tagged communications data, communications industry, emergency services, Home Secretary, Jacqui Smith MP, Rt Hon Jacqui Smith MP.

Section three is tagged Broadband, BT, communications, communications changes, communications data, communications data capability, communications data limits, communications environment, communications event, communications industry, communications networks, communications providers, communications service providers, communications services, emergency services, Her Majesty’s Revenue and Customs, Home Office, intelligence agencies, internet browsing, Internet Protocol, Internet Service, IP, mobile telephone system, physical networks, public telecommunications service, registered owner, Serious Organised Crime Agency, social networking, specified communications data, The communications industry, United Kingdom.

Section four is tagged …(you get the picture)

Section five, paragraph six, has the comment “fully compatible with the ECHR” is, of course, an assertion made by the government, about its own legislation. Has that assertion ever been tested in a court? authored by Owen Blacker on April 28th 11:32pm.

Selected text from Section five, paragraph eight, has the comment Over my dead body! authored by Mr Angry on April 28th 9:32pm

Note that every author, document, section, paragraph, text selection, category, tag, comment and comment author has a URI, Atom, RSS and RDF end point (actually, text selection and comment author feeds are forthcoming features).

Now, with this basic architecture mapped out, we might wonder what Triplify could add to this. I’ve already shown in my earlier post that, with little effort, it re-publishes data from a relational database as N-Triples semantic data, so everything you see above, could be published as RDF data (and JSON, too).

So, in my simple view of the world, we have a data source that requires very little effort to generate content for and manage (JISCPress/WriteToReply/WordPress), a method of automatically publishing the data for the semantic web (Triplify) and, with TALIS, an API for data storage, data access, query, and augmentation. As always, my mantra is ‘I am not a developer’, but from where I’m standing, this high-level ‘workflow’ seems reasonable.

The benefits for the JISC community would primarily be felt by using the JISCPress website, in a similar way (albeit with better, more informed design) to the WriteToReply ‘tags’ site. We could search across the full text of funding calls, browse the reports by author, categories and tags and grab news feeds from favourite authors, searches, tags or categories. This is all in addition to the comment, feedback and discussion features we’ve proposed, too. Further benefits would be had from ‘re-publishing’ the site content as semantic data to a platform such as TALIS. Not only could there be further Rapid Innovation projects which worked on this data, but it would be available for any member of the public to query and re-use, too. No longer would our final project reports, often the distillation of our research, sit idle as PDF files on institutional websites and in institutional repositories. If the documentation we produce is worth anything, then it’s worth re-publishing openly as semantic data.

Finally, in order to benefit from the (free) use of TALIS Connected Commons, the data being published needs to be licensed under a public domain or Creative Commons ‘zero’ licence. I suspect Crown Copyright is not compatible with either of these licenses, although why the hell public consultation documents couldn’t be licensed this way, I don’t know. Do you? For JISCPress, this would be a choice JISC could make. The alternative is to use the commercial TALIS platform or something similar.

As usual, tell me what you think… Thanks.

Triplify: Make your blog mashable

Last week, I wrote about how it is relatively simple to ‘pimp your ride on the semantic web’. Over the weekend, I stumbled upon Triplify, a small ‘plugin’ for pretty much any web publishing platform, that “reveals the semantic structures encoded in relational databases by making database content available as RDF, JSON or Linked Data.” What is so appealing about Triplify is how easy it is to implement, especially alongside a WordPress site.

I can confirm that the three-step installation process is all it takes, although I wouldn’t undertake implementing this blindly as you are, literally, exposing a semantic representation of your database content. In other words, you should look at the configuration file you’re using and check that it’s going to expose the right data and not clear text passwords and unpublished posts and comments. Before I implemented it, I realised that it would expose comments on a bunch of posts that I have since made private (they were imported from an old, private blog), so I had to ‘unapprove’ those comments so the script didn’t expose them to the public. A five minute job. Alternatively, the script could probably be modified to work around my problem, by only exposing comments after a certain date, for example.

The end result is that, with a WordPress site, you expose a semantic representation of your users, posts, pages, tags, categories, comments and attachments in RDF (N-Triples) and JSON formatted data (for JSON, just add ‘?t-output=json’ to the end of the URI). Like I said though, it could be used on any database driven web application. Here’s what you get when you expose the high level links to your content:

<http://blog.josswinn.org/triplify/> <http://www.w3.org/2000/01/rdf-schema#comment> "Generated by Triplify V0.5 (http://Triplify.org)" .
<http://blog.josswinn.org/triplify/> <http://creativecommons.org/ns#license> <http://creativecommons.org/licenses/by/2.0/uk/> .
<http://blog.josswinn.org/triplify/post> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://www.w3.org/2002/07/owl#Class> .
<http://blog.josswinn.org/triplify/attachment> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://www.w3.org/2002/07/owl#Class> .
<http://blog.josswinn.org/triplify/tag> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://www.w3.org/2002/07/owl#Class> .
<http://blog.josswinn.org/triplify/category> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://www.w3.org/2002/07/owl#Class> .
<http://blog.josswinn.org/triplify/user> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://www.w3.org/2002/07/owl#Class> .
<http://blog.josswinn.org/triplify/comment> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://www.w3.org/2002/07/owl#Class> .

Here’s an example of what you get when you expose the full content:

<http://blog.josswinn.org/triplify/post/154> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://rdfs.org/sioc/ns#Post> .
<http://blog.josswinn.org/triplify/post/154> <http://rdfs.org/sioc/ns#has_creator> <http://blog.josswinn.org/triplify/user/1> .
<http://blog.josswinn.org/triplify/post/154> <http://purl.org/dc/terms/created> "2008-10-06T05:55:25"^^<http://www.w3.org/2001/XMLSchema#dateTime> .
<http://blog.josswinn.org/triplify/post/154> <http://rdfs.org/sioc/ns#content> "Up early to go to Sheffield for LPI exams. The last week has left me underprepared. Never mind." .
<http://blog.josswinn.org/triplify/post/154> <http://purl.org/dc/terms/modified> "2008-10-06T20:12:15"^^<http://www.w3.org/2001/XMLSchema#dateTime> .
...
<http://blog.josswinn.org/triplify/post/154> <http://www.holygoat.co.uk/owl/redwood/0.1/tags/taggedWithTag> <http://blog.josswinn.org/triplify/tag/27> .
...
<http://blog.josswinn.org/triplify/post/154> <http://www.holygoat.co.uk/owl/redwood/0.1/tags/taggedWithTag> <http://blog.josswinn.org/triplify/tag/41> .
<http://blog.josswinn.org/triplify/post/154> <http://www.holygoat.co.uk/owl/redwood/0.1/tags/taggedWithTag> <http://blog.josswinn.org/triplify/tag/42> .
...
<http://blog.josswinn.org/triplify/post/154> <http://sdp.iasi.rdsnet.ro/semantic-wordpress/vocabulary/belongsToCategory> <http://blog.josswinn.org/triplify/category/22> .
...
<http://blog.josswinn.org/triplify/tag/154> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://www.holygoat.co.uk/owl/redwood/0.1/tags/Tag> .
<http://blog.josswinn.org/triplify/tag/154> <http://www.holygoat.co.uk/owl/redwood/0.1/tags/tagName> "valentine" .
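To give a feel for how little it takes to consume output like this, here is a minimal sketch in Python that splits such lines into (subject, predicate, object) tuples. It is not a full N-Triples parser: the regular expression is my own and only covers the shapes shown in the sample (URI subjects and predicates, URI objects, and quoted literals with an optional datatype), and it silently skips anything else, including the "..." elisions. The function name `parse_triples` is hypothetical.

```python
import re

# Covers only the simple shapes in the sample output: <s> <p> <o> . and
# <s> <p> "literal"[^^<datatype>] . Language tags and blank nodes are not handled.
TRIPLE = re.compile(
    r'^<(?P<s>[^>]*)>\s+<(?P<p>[^>]*)>\s+'
    r'(?:<(?P<o_uri>[^>]*)>|"(?P<o_lit>(?:[^"\\]|\\.)*)"(?:\^\^<(?P<dtype>[^>]*)>)?)'
    r'\s*\.$'
)


def parse_triples(text):
    """Return a list of (subject, predicate, object) tuples from N-Triples text."""
    triples = []
    for line in text.splitlines():
        match = TRIPLE.match(line.strip())
        if match is None:
            continue  # blank lines, "..." elisions and unsupported syntax
        if match.group("o_uri") is not None:
            obj = match.group("o_uri")
        else:
            obj = match.group("o_lit")
        triples.append((match.group("s"), match.group("p"), obj))
    return triples


# A few lines copied from the sample output above.
SAMPLE = '''\
<http://blog.josswinn.org/triplify/post/154> <http://rdfs.org/sioc/ns#has_creator> <http://blog.josswinn.org/triplify/user/1> .
<http://blog.josswinn.org/triplify/post/154> <http://purl.org/dc/terms/created> "2008-10-06T05:55:25"^^<http://www.w3.org/2001/XMLSchema#dateTime> .
...
<http://blog.josswinn.org/triplify/tag/154> <http://www.holygoat.co.uk/owl/redwood/0.1/tags/tagName> "valentine" .
'''

if __name__ == "__main__":
    for subject, predicate, obj in parse_triples(SAMPLE):
        print(subject, predicate, obj)
```

For anything beyond a quick look, a proper RDF library would be the safer choice, since real data will contain escapes and language tags that this sketch ignores.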

You can choose to expose different levels of information in your HTML source. If you have more than a moderate amount of content, you’ll probably want to just expose the top level links as in the first example and let the users of your data dig deeper. You’ll also note that you can (and should) attach a license to your data.

A number of namespaces are recognised as well as a WordPress vocabulary.

$triplify['namespaces']=array(
    'vocabulary'=>'http://sdp.iasi.rdsnet.ro/semantic-wordpress/vocabulary/',
    'rdf'=>'http://www.w3.org/1999/02/22-rdf-syntax-ns#',
    'rdfs'=>'http://www.w3.org/2000/01/rdf-schema#',
    'owl'=>'http://www.w3.org/2002/07/owl#',
    'foaf'=>'http://xmlns.com/foaf/0.1/',
    'sioc'=>'http://rdfs.org/sioc/ns#',
    'sioctypes'=>'http://rdfs.org/sioc/types#',
    'dc'=>'http://purl.org/dc/elements/1.1/',
    'dcterms'=>'http://purl.org/dc/terms/',
    'skos'=>'http://www.w3.org/2004/02/skos/core#',
    'tag'=>'http://www.holygoat.co.uk/owl/redwood/0.1/tags/',
    'xsd'=>'http://www.w3.org/2001/XMLSchema#',
    'update'=>'http://triplify.org/vocabulary/update#',
);

So, what’s the point in doing this? Well, it’s fairly trivial and if you think that structured, linked, machine-readable licensed data is a Good Thing, why not? The Triplify website lists a number of advantages:

Such a triplification of your Web application has tremendous advantages:

- The installations of the Web application are better found and search engines can better evaluate the content.

- Different installations of the Web application can easily syndicate arbitrary content without the need to adopt interfaces, content representations or protocols, even when the content structures change.

- It is possible to create custom tailored search engines targeted at a certain niche. Imagine a search engine for products, which can be queried for digital cameras with high resolution and large zoom.

Ultimately, a triplification will counteract the centralization we faced through Google, YouTube and Facebook and lead to an increased democratization of the Web

The vision of the semantic web and semantic publishing is one of meaningfully identifying objects (and people) on the Internet and showing their relationships. This should improve searches for things on the web, but also improve how we exchange knowledge, re-use information and help clarify our identity on the web, too. It’s an ambitious task, but made easier with tools like Triplify. The semantic web also raises questions over individual privacy and, if data is well formed and accessible, it may be easier to control and therefore censor. The creator of Triplify recently gave a technical presentation on Triplify and how it is being used to publish data collected by the OpenStreetMap project. It shows how geodata exposed in this way can result in mashup applications that directly benefit you and me.

Addicted to feeds

I’ve been a long time consumer of news feeds and spend a lot of time reading the web via 200+ feeds in Google Reader. More recently, and largely as a result of working on WriteToReply, I’ve become just as addicted to publishing feeds from any data end point I can find.

WordPress makes this quite easy for developers, providing a whole load of functions and template tags for feeds. For the rest of us, there’s also documentation, which is useful if you’re wondering what kinds of feeds can be generated from a basic WordPress site.

All the examples below assume you’re using ‘pretty URLs’. If your URLs are something like http://example.com/?p=123 then the same principles apply but you’ll use the format /?feed=feed_type, e.g. http://example.com/?feed=rss2. The documentation shows full examples.

So, here are the basic content feeds. RSS is RSS version 0.92 and RDF is RSS version 1.0, if you were wondering.

http://example.com/feed/

http://example.com/feed/rss/

http://example.com/feed/rss2/

http://example.com/feed/rdf/

http://example.com/feed/atom/

It’s also pretty straightforward to create a feed from a category or tag

http://example.com/category/my_category/feed/

http://example.com/tag/my_tag/feed/

You can also create feeds from combined tags

http://example.com/tag/tag1+tag2+tag3/feed/

And we know that a feed is available for site comments

http://example.com/comments/feed/

and it’s simple to grab a feed of comments from a single post by appending /feed/ to the end of the post permalink.

http://example.com/2009/01/01/my-latest-post/feed

You can also create a feed of a single post itself, by appending '?withoutcomments=1' to the end of the URL

http://example.com/2009/01/01/my-latest-post/feed/?withoutcomments=1

There is a feed for each author of the blog

http://example.com/author/joss/feed

but alas, as far as I know, no feed for the comments by any particular person.

You can also do something fancy with dates

http://example.com/2009/feed

http://example.com/2009/01/feed

http://example.com/2009/01/15/feed

and one of my favourite types of feed is from a search

http://example.com/?s=search_term&feed=rss2
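Since all of these follow the same pattern, the URLs above can be generated rather than typed. The following is only a sketch in Python, assuming a site with ‘pretty URLs’; the helper names `feed_url` and `search_feed_url` are my own and not part of WordPress. It always adds a trailing slash, whereas the examples above show both forms.

```python
from urllib.parse import quote, urlencode


def feed_url(base, *segments, feed_type=None):
    """Build a pretty-permalink feed URL, e.g. BASE/tag/my_tag/feed/."""
    parts = [base.rstrip("/")]
    # Keep "+" unescaped so combined tags such as tag1+tag2 survive intact.
    parts += [quote(str(segment), safe="+") for segment in segments]
    parts.append("feed")
    if feed_type:
        parts.append(feed_type)  # e.g. "rss", "rss2", "rdf" or "atom"
    return "/".join(parts) + "/"


def search_feed_url(base, term, feed_type="rss2"):
    """Build a search feed URL using the query-string form."""
    return base.rstrip("/") + "/?" + urlencode({"s": term, "feed": feed_type})


if __name__ == "__main__":
    site = "http://example.com"
    print(feed_url(site))
    print(feed_url(site, feed_type="atom"))
    print(feed_url(site, "category", "my_category"))
    print(feed_url(site, "tag", "tag1+tag2+tag3"))
    print(feed_url(site, "author", "joss"))
    print(feed_url(site, "2009", "01", "15"))
    print(search_feed_url(site, "search_term"))
```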

Now all of this is well and good, but how many readers are going to know or care about constructing the various types of feeds available? Fortunately, it’s possible to make many of these feeds auto-discoverable either by adding some simple code to your theme’s header.php or installing a plugin.

By default, two feeds are auto-discoverable on your WordPress site: An atom and rss2 feed of your posts.

By using the Extra Feed Links plugin, you can make your comments, category, tag, author and search feeds autodiscoverable.

It’s also got a useful template tag that allows you to show the feed links in your theme, making the discovery of feeds even easier. I created a simple widget for the plugin to display the feed and an RSS icon in the sidebar.

Here’s the code. Let me know where it could be improved as I just hacked it together from looking at other widgets.

<?php

function widget_extrafeeds_register() {

function widget_extrafeeds($args) {

extract($args);

?>

<br />

<?php echo $before_widget;

echo $before_title;

echo $widget_name;

echo $after_title; ?>

<ul class="sidebarList">

<?php extra_feed_link(); ?> <?php extra_feed_link('http://path/to/your/feed/icon/feed.png'); ?>

</ul>

<?php echo $after_widget; ?>

<?php

}

// Register the widget so it can be added to a sidebar.
register_sidebar_widget('Extra Feeds', 'widget_extrafeeds');

}

// The callback name is passed as a string.
add_action('init', 'widget_extrafeeds_register');

?>

To get this to work with the plugin, you need to add this to the very bottom of the plugin’s main.php file

// widget support
require(dirname(__FILE__) . '/widget.php');

Like I said, if anyone can improve on this, do let me know. Also note that you’ll need to point the URL in the widget to a feed icon. A lot of themes include them in their /images/ directory, which makes it easy.

By using the widget or template tag, you can have these appearing on the relevant pages.


Try it by using http://writetoreply.org/tags 🙂


If you’re interested in how to add category and tag auto-discovery feeds to your theme’s source code, try adding this to your header.php

<?php if (is_category()) { ?>

<link rel="alternate" type="application/atom+xml" title="<?php bloginfo('name'); ?> &raquo; <?php single_cat_title(''); ?> Atom Feed" href="<?php echo

get_category_feed_link(get_query_var('cat'), 'atom'); ?>" />

<?php } ?>

<?php if (is_tag()) { ?>

<link rel="alternate" type="application/atom+xml" title="<?php bloginfo('name'); ?> &raquo; <?php single_tag_title(''); ?> Atom Feed" href="<?php echo

get_tag_feed_link(get_query_var('tag_id'), 'atom'); ?>" />

<?php } ?>

<?php if (is_category()) { ?>

<link rel="alternate" type="application/rss+xml" title="<?php bloginfo('name'); ?> &raquo; <?php single_cat_title(''); ?> RSS2 Feed" href="<?php echo

get_category_feed_link(get_query_var('cat'), 'rss2'); ?>" />

<?php } ?>

<?php if (is_tag()) { ?>

<link rel="alternate" type="application/rss+xml" title="<?php bloginfo('name'); ?> &raquo; <?php single_tag_title(''); ?> RSS2 Feed" href="<?php echo

get_tag_feed_link(get_query_var('tag_id'), 'rss2'); ?>" />

<?php } ?>

<?php if (is_category()) { ?>

<link rel="alternate" type="application/rdf+xml" title="<?php bloginfo('name'); ?> &raquo; <?php single_cat_title(''); ?> as RDF data" href="<?php echo

get_category_feed_link(get_query_var('cat'), 'rdf'); ?>" />

<?php } ?>

<?php if (is_tag()) { ?>

<link rel="alternate" type="application/rdf+xml" title="<?php bloginfo('name'); ?> &raquo; <?php single_tag_title(''); ?> as RDF data" href="<?php echo

get_tag_feed_link(get_query_var('tag_id'), 'rdf'); ?>" />

<?php } ?>

I learned this from the author of this related plugin, which is similar but not quite as powerful as the Extra Feed Links plugin.
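To check what a feed reader would actually find once link elements like these are in the page head, something like the following Python sketch could be used. It only illustrates the idea of auto-discovery and is not how any particular reader does it; the class name `FeedLinkFinder`, the function `discover_feeds` and the sample HTML are all my own assumptions.

```python
from html.parser import HTMLParser

# The three MIME types used in the header.php snippet above.
FEED_TYPES = {"application/atom+xml", "application/rss+xml", "application/rdf+xml"}


class FeedLinkFinder(HTMLParser):
    """Collect <link rel="alternate"> elements that point at feeds."""

    def __init__(self):
        super().__init__()
        self.feeds = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel = (attr.get("rel") or "").lower().split()
        mime = (attr.get("type") or "").lower()
        if "alternate" in rel and mime in FEED_TYPES:
            self.feeds.append((mime, attr.get("title"), attr.get("href")))


def discover_feeds(html):
    """Return (type, title, href) for every auto-discoverable feed in the HTML."""
    finder = FeedLinkFinder()
    finder.feed(html)
    return finder.feeds


# Hypothetical page head, shaped like the output of the snippet above.
SAMPLE_HTML = """
<html><head>
<link rel="alternate" type="application/atom+xml" title="Example &raquo; News Atom Feed" href="http://example.com/category/news/feed/atom/" />
<link rel="stylesheet" type="text/css" href="http://example.com/style.css" />
</head><body></body></html>
"""

if __name__ == "__main__":
    for mime, title, href in discover_feeds(SAMPLE_HTML):
        print(mime, href)
```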

Finally, if you use FeedBurner, beware that it breaks some of the above feeds. One fix for ensuring that tag and category feeds continue to work as they should is to modify the FeedBurnerFeedSmith plugin as noted here. Simply change the line

is_feed() && $feed != 'comments-rss2' && !is_single() &&

to read

is_feed() && $feed != 'comments-rss2' && !is_single() && !is_tag() &&

That’ll do for now. I intend to learn more about the RSS and Atom specifications over the next few weeks and will post anything I think relevant here. If you can add anything to this post, please do leave a comment. Thanks.

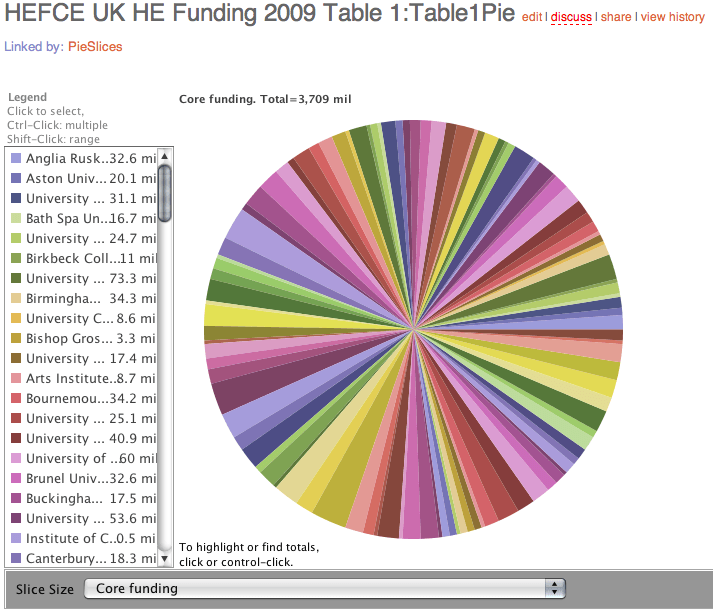

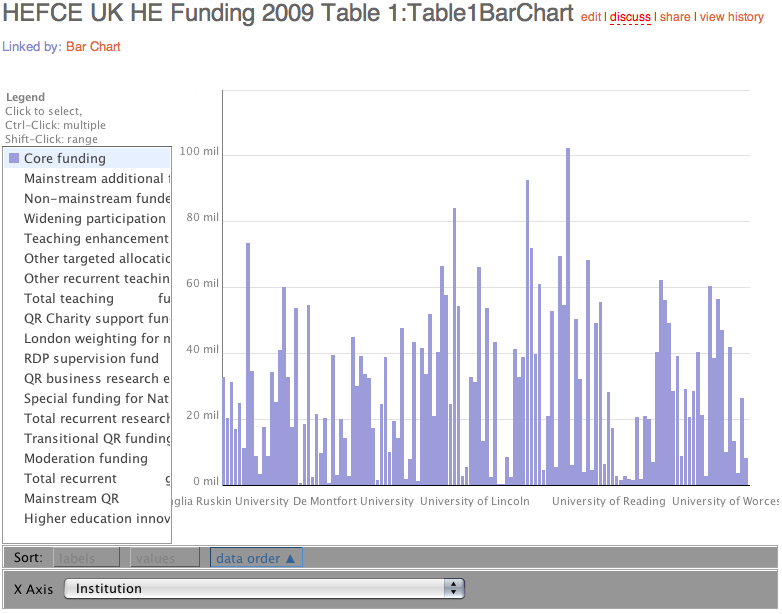

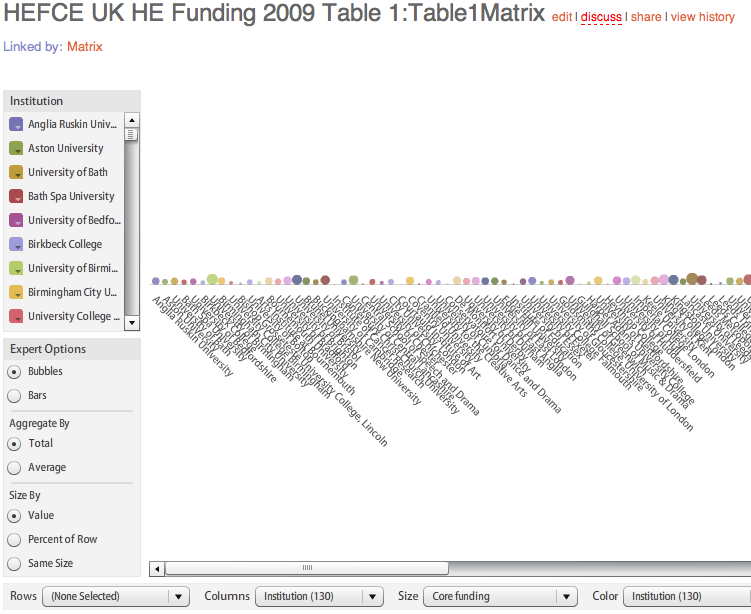

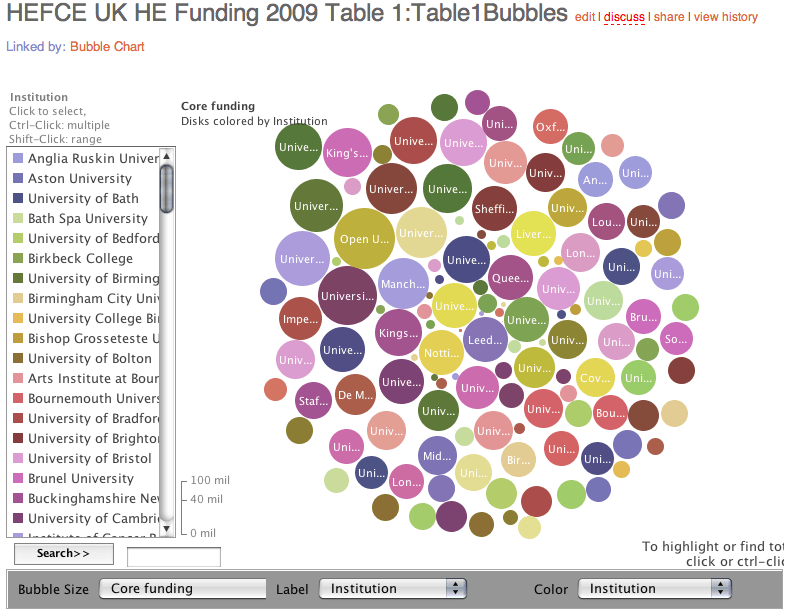

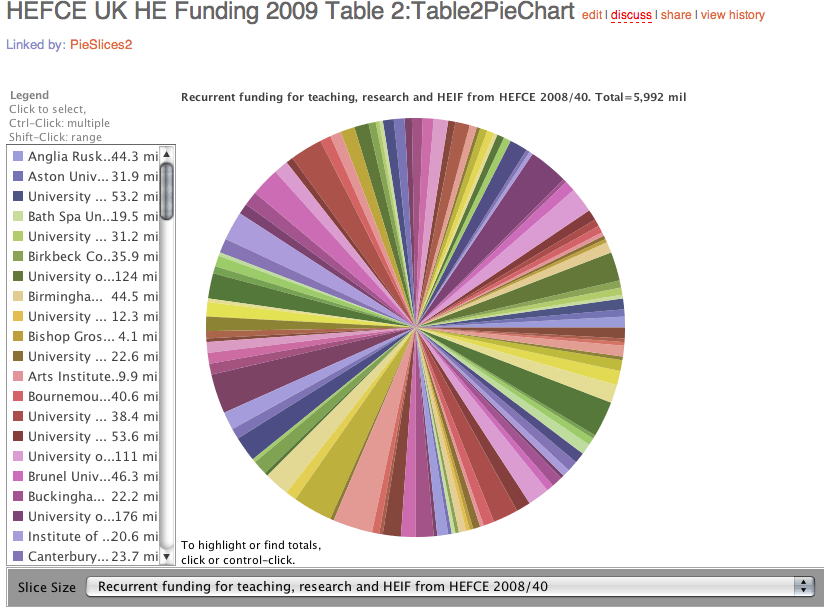

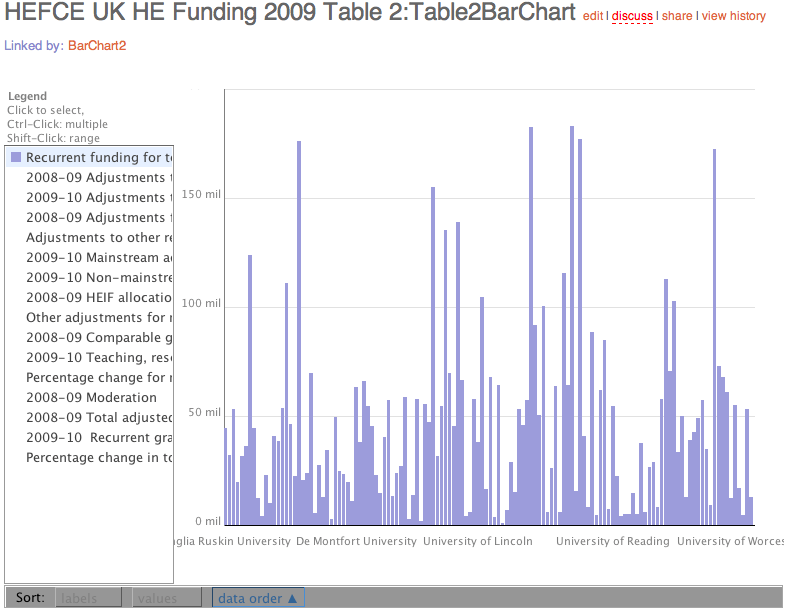

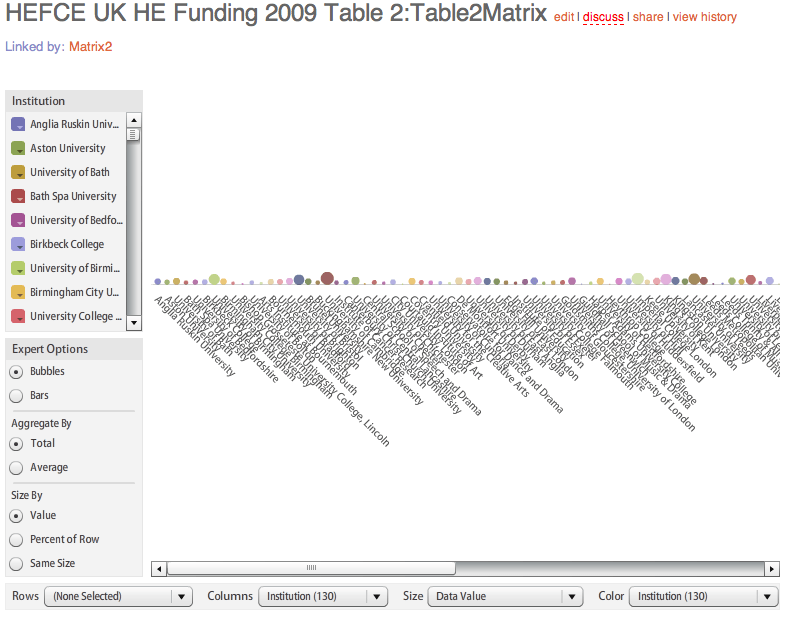

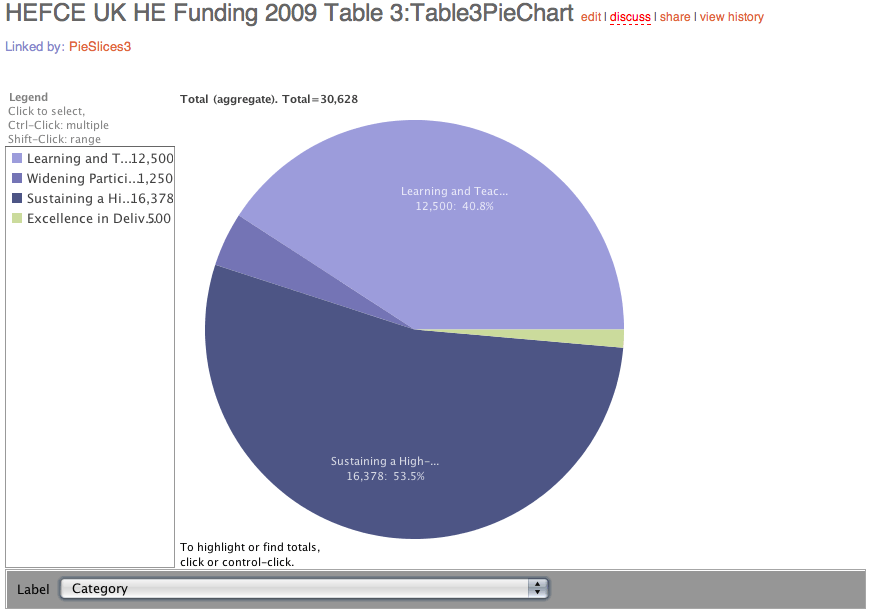

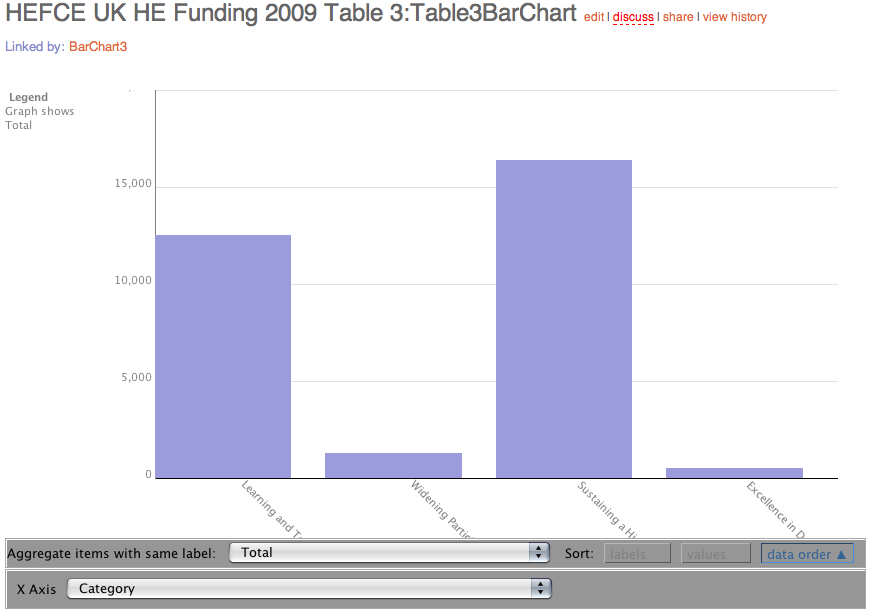

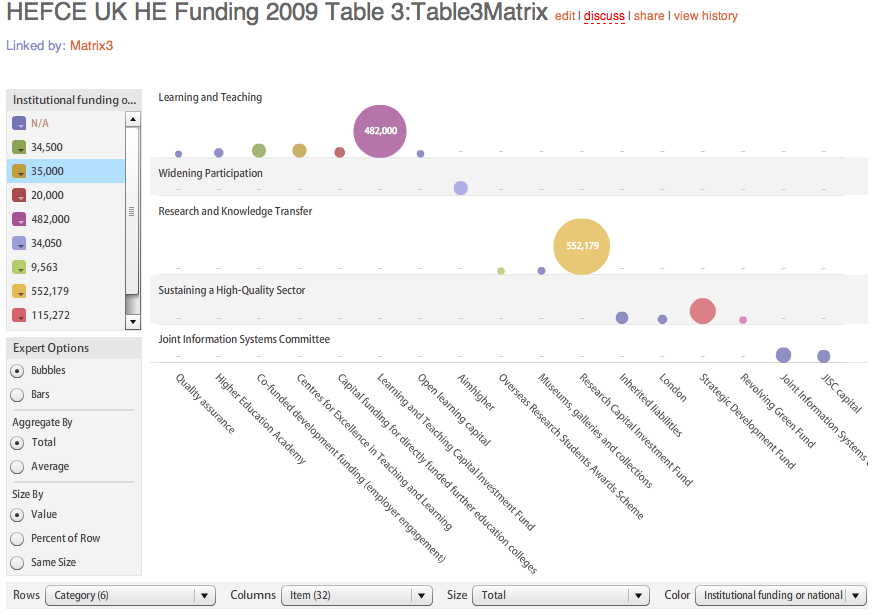

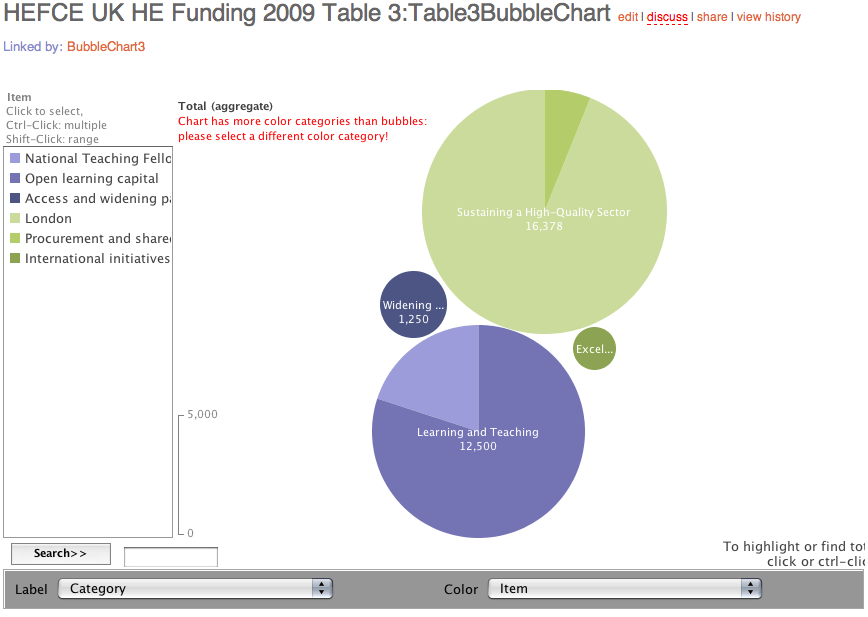

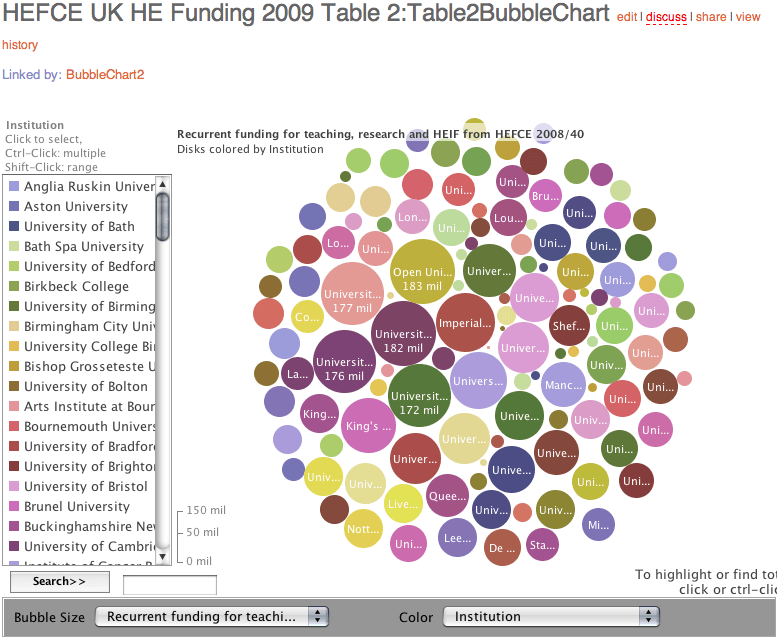

HEFCE HE Grant Allocations 2009-10 Visualised

In our weekly team meeting, I mentioned that I’d created some visualisations of the RAE research funding allocations. I also mentioned that Tony Hirst had previously done the same for the HEFCE teaching funding allocations. I offered to send everyone links to these, but before doing so, I thought I’d have a go at re-creating the HEFCE visualisations myself to get a bit more practice in with IBM’s Many Eyes Wikified. So this is a companion piece to my previous post. All credit to Tony for opening my eyes to this stuff.

So, HEFCE have announced the 2009/10 grant allocations for UK Higher and Further Education institutions and provided full spreadsheets of the figures. I’ve imported the data into Google Spreadsheets and made the three tables publicly accessible as CSV files (1), (2), (3). Note that I’ve stripped out all data relating to FE grant allocations, which is included in the original spreadsheets.

Next, I’ve imported the CSV files into IBM’s Many Eyes Wikified (1), (2), (3), and these wikified tables are now the data sources for the following visualisations.

Recurrent grant for academic year 2009-10

The Pie

Bar Chart

Matrix

Bubble Chart

Comparison with 2008-09 academic year recurrent grant

The Pie

Non-recurrent funding for 2009-10


The Pie

Bar Chart

Matrix