I am in Venice to present a paper with two colleagues from the School of Architecture, at a two-day conference organised by the Metadata for Architectural Contents in Europe (MACE) Project. Yesterday was a significant day, for reasons I want to detail below. Skip to the end of this long post if you just want to know the outcome and why this conference has been an important and positive turning point in the Virtual Studio project.

I joined the university just over a year ago to work on the JISC-funded LIROLEM Project:

The Project aimed to lay the groundwork for the establishment of an Institutional Repository that supports a wide variety of non-textual materials, e.g. digital animations of 3-D models, architectural documentation such as technical briefings and photographs, as well as supporting text based materials. The project arose out of the coincidental demands for the University to develop a repository of its research outputs, and a specific project in the school of Architecture to develop a “Virtual Studio”, a web based teaching resource for the school of Architecture.

At the end of the JISC-funded period, I wrote a lengthy summary on the project blog, offering a personal overview of our achievements and challenges during the course of the project. Notably, I wrote:

The LIROLEM Project was tied to a Teaching Fellowship application by two members of staff in the School of Architecture. Their intentions were, and still are, to develop a Virtual Studio which complements the physical design Studio. Although the repository/archive functionality is central to the requirements of the Virtual Studio, rather than being the primary focus of the Studio, a ‘designerly’, dynamic user interface that encourages participation and collaboration is really key to the success of the Studio as a place for critical thinking and working. In effect, the actual repository should be invisible to the Architect who has little interest, patience or time for the publishing workflow that EPrints requires. More often than not, the Architects were talking about wiki-like functionality, that allowed people to rapidly generate new Studio spaces, invite collaboration, bring in multimedia objects such as plans, images and models, offer comment, discussion and critique. As student projects developed in the Virtual Studio, finished products could be archived and showcased inviting another round of comment, critique and possibly derivative works from a wider community outside the classroom Studio.

Our conference paper discussed the difficulties of ensuring that the (minority) interests of the Architecture staff were met while trying to gain widespread institutional support and sustainability for the Institutional Repository which the LIROLEM project aimed, and had an obligation, to achieve. During the presentation (below), we asked:

Can academics and students working in different disciplines be easily accommodated within the same archival space?

Our presentation slides. My bicycle is a reference to Bijker (1997).

The paper argues that advances in technology result from complex and often conflicting social interests. Within the context of the LIROLEM Project, it was the wider interests of the Institution which took precedence, rather than the minority interests of the Architectural staff. I’m not directing criticism towards decisions made during the project; after all, I made many of them so as to ensure the long-term sustainability of the repository, but yesterday we argued that

architecture is an atypical discipline; its emphasis is more visual than literary, more practice than research-based and its approach to teaching and learning is more fluid and varied than either the sciences or the humanities (Stevens, 1998). If we accept that it is social interests that underlie the development of technology rather than any inevitable or rational progress (Bijker, 1997), the question arises as to what extent an institutional repository can reconcile architectural interests with the interests of other disciplines. Architecture and the design disciplines are marginal actors in the debate surrounding digital archive development, this paper argues, and they bring problems to the table that are not easily resolved given available software and that lie outside the interests of most other actors in academia.

Prior to the conference, I was unsure of what to do next about the Virtual Studio. I felt that the repository was the wrong application for supporting a collaborative studio environment for architects. Central to this was the unappealing deposit and cataloguing workflow in the IR and the general aesthetic of the user interface which, despite some customisation, does not meet designers’ expectations of a visual tool for the deposit and discovery of architectural materials.

However, the MACE Project appears to have just come to our rescue with the development of tools that query OAI-PMH data mapped to their LOM profile, enrich the harvested metadata (for example, by using external services such as Google Maps and collecting user-generated tags) and provide a social platform for searching participating repositories. I managed to ask several questions throughout the day to clarify how the anticipated architectural content in our repository could be exposed to MACE. My main concern was that we have a general-purpose Institutional Repository but want to handle subject-specific (architecture) content in a unique way. I was told that OAI-PMH has a ‘set’ attribute which could be used to isolate the architectural content in the IR for harvesting by MACE. Another question related to the building of defined communities or groups within the larger MACE community (e.g. students on a specific course), and I was told that this is a feature they intend to implement.
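To make the ‘set’ idea concrete, here is a minimal sketch of what a selective harvest request looks like. The `verb`, `metadataPrefix` and `set` arguments are standard OAI-PMH request parameters; the repository address, the `architecture` set name and the sample response are my own placeholders, and I have used the generic `oai_dc` prefix because I don't know which prefix MACE's harvester actually asks for.

```python
from urllib.parse import urlencode
import xml.etree.ElementTree as ET

# Namespace used by OAI-PMH 2.0 responses.
OAI_NS = {"oai": "http://www.openarchives.org/OAI/2.0/"}


def list_records_url(base_url, set_spec, metadata_prefix="oai_dc"):
    """Build a ListRecords request limited to a single set."""
    query = urlencode({
        "verb": "ListRecords",
        "metadataPrefix": metadata_prefix,
        "set": set_spec,
    })
    return f"{base_url}?{query}"


def record_identifiers(response_xml):
    """Pull the record identifiers out of a ListRecords response."""
    root = ET.fromstring(response_xml)
    return [el.text for el in root.findall(".//oai:header/oai:identifier", OAI_NS)]


# Hypothetical response fragment, trimmed to the record headers only.
SAMPLE_RESPONSE = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListRecords>
    <record>
      <header>
        <identifier>oai:repository.example.org:101</identifier>
        <setSpec>architecture</setSpec>
      </header>
    </record>
    <record>
      <header>
        <identifier>oai:repository.example.org:102</identifier>
        <setSpec>architecture</setSpec>
      </header>
    </record>
  </ListRecords>
</OAI-PMH>"""

if __name__ == "__main__":
    # Placeholder endpoint and set name, not our real repository settings.
    print(list_records_url("https://repository.example.org/cgi/oai2", "architecture"))
    print(record_identifiers(SAMPLE_RESPONSE))
```

The point is that the harvester only ever sees the records in the named set, so the rest of the general-purpose IR stays out of the architecture-specific platform.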

Because of the work of MACE, the development of a search interface and ‘studio’ community platform has largely been done for us (at least to the level of expectation we ever had for the project). Ironically, we came to the conference questioning the use of the IR as the repository for the Virtual Studio, but now believe that we may benefit from the interoperability of the IR, despite suffering some of its other less appealing attributes. One of the things that remains for us to do is to improve the deposit experience to ensure we collect content that can be exposed to the MACE platform.

For this, I hope we can develop a SWORD tool that simplifies the deposit process for staff and students, reducing the workflow to the two or three brief steps you find on Flickr or YouTube, repositories they are likely to be familiar with and judge others against. User profile data could be collected from their LDAP login information and they would be asked to title, describe and tag their work. A default BY-NC-ND Creative Commons license would be chosen for them, which they could opt out of (but in doing so they would also opt out of MACE harvesting).
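As a rough sketch of how little the depositor would need to supply, the snippet below models a single deposit and serialises it as an Atom entry, the format that SWORD builds on. Everything named here (`StudioDeposit`, `to_atom_entry`, the field names) is hypothetical, and a real SWORD deposit would also need the repository's endpoint, authentication and packaging details, none of which I have worked out yet.

```python
from dataclasses import dataclass, field
from typing import List, Optional
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"


@dataclass
class StudioDeposit:
    """The few things a depositor is asked for (hypothetical model)."""
    title: str
    description: str
    author: str  # assumed to be filled in from the LDAP login, not typed by the user
    tags: List[str] = field(default_factory=list)
    accept_default_license: bool = True  # BY-NC-ND unless the depositor opts out

    @property
    def rights(self) -> Optional[str]:
        return "CC BY-NC-ND" if self.accept_default_license else None

    @property
    def mace_harvestable(self) -> bool:
        # Opting out of the default license also opts out of MACE harvesting.
        return self.accept_default_license


def to_atom_entry(deposit: StudioDeposit) -> str:
    """Serialise the deposit metadata as a minimal Atom entry."""
    ET.register_namespace("", ATOM_NS)
    entry = ET.Element(f"{{{ATOM_NS}}}entry")
    ET.SubElement(entry, f"{{{ATOM_NS}}}title").text = deposit.title
    ET.SubElement(entry, f"{{{ATOM_NS}}}summary").text = deposit.description
    author = ET.SubElement(entry, f"{{{ATOM_NS}}}author")
    ET.SubElement(author, f"{{{ATOM_NS}}}name").text = deposit.author
    for tag in deposit.tags:
        ET.SubElement(entry, f"{{{ATOM_NS}}}category", {"term": tag})
    if deposit.rights is not None:
        ET.SubElement(entry, f"{{{ATOM_NS}}}rights").text = deposit.rights
    return ET.tostring(entry, encoding="unicode")


if __name__ == "__main__":
    work = StudioDeposit(
        title="Pavilion study",
        description="Section drawings and a massing model.",
        author="A. Student",
        tags=["pavilion", "timber"],
    )
    print(to_atom_entry(work))
    print("Harvestable by MACE:", work.mace_harvestable)
```

Keeping the license decision as a single flag means the harvesting rule follows from it automatically, rather than being a second choice the depositor has to think about.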

Boris Müller, who works on the MACE project, spoke yesterday of the “joy of interacting with [software] interfaces.” This has clearly been a central concern of the MACE project as it has been for the Virtual Studio project, too. I’m looking forward to developing a simple but appealing interface that can bring at least a little joy to my architect colleagues and their students.

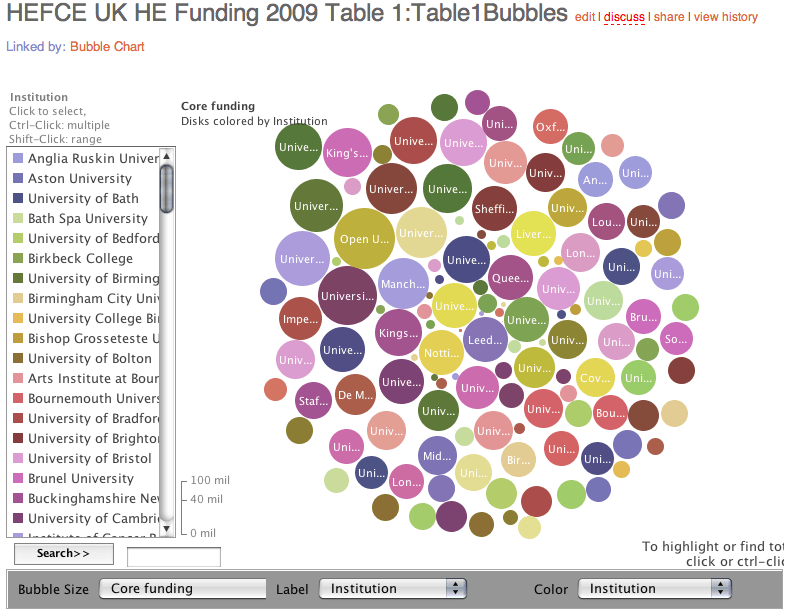

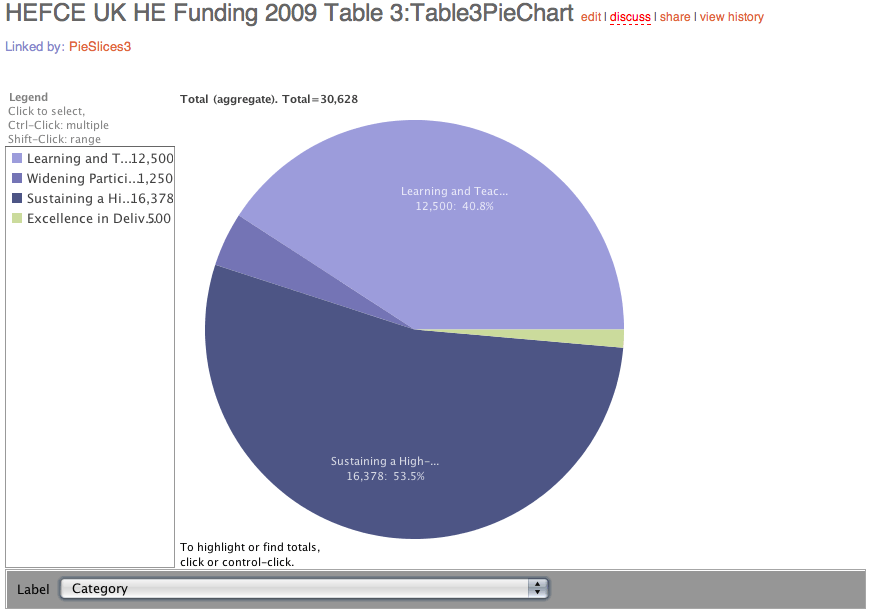

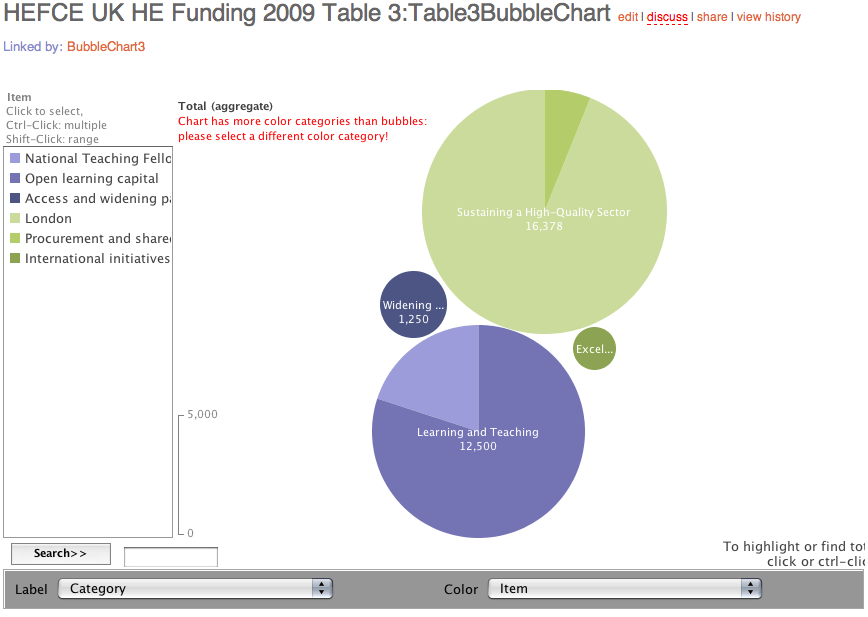

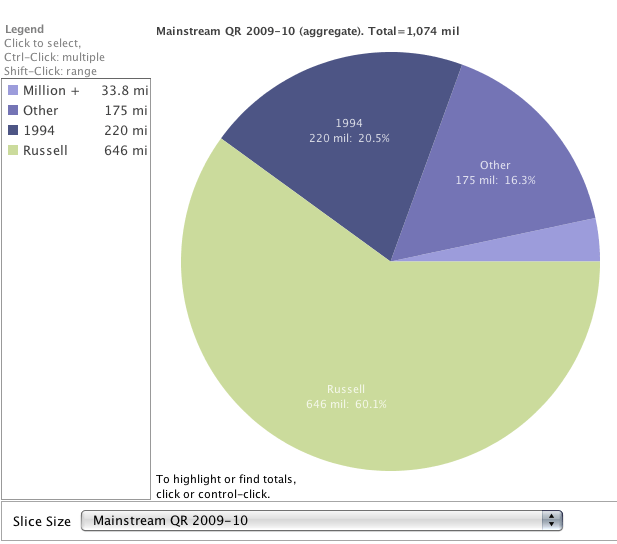

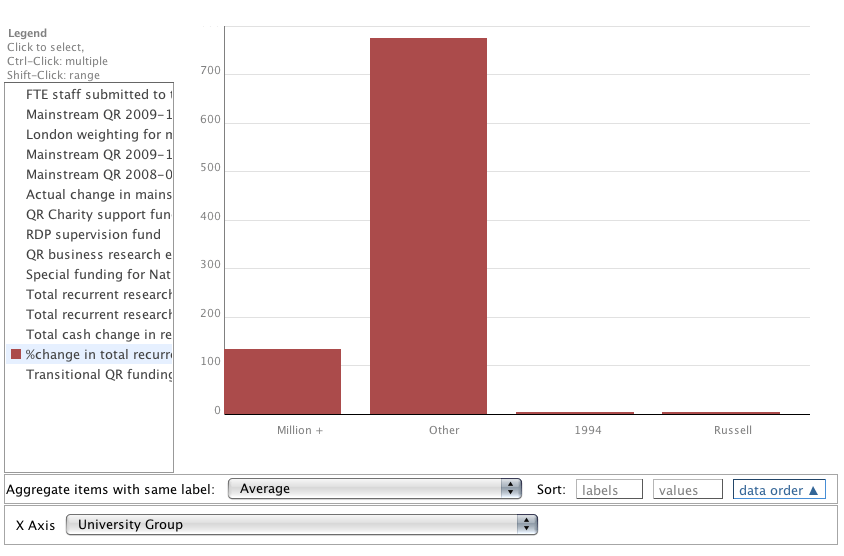

Non-recurrent funding for 2009-10
